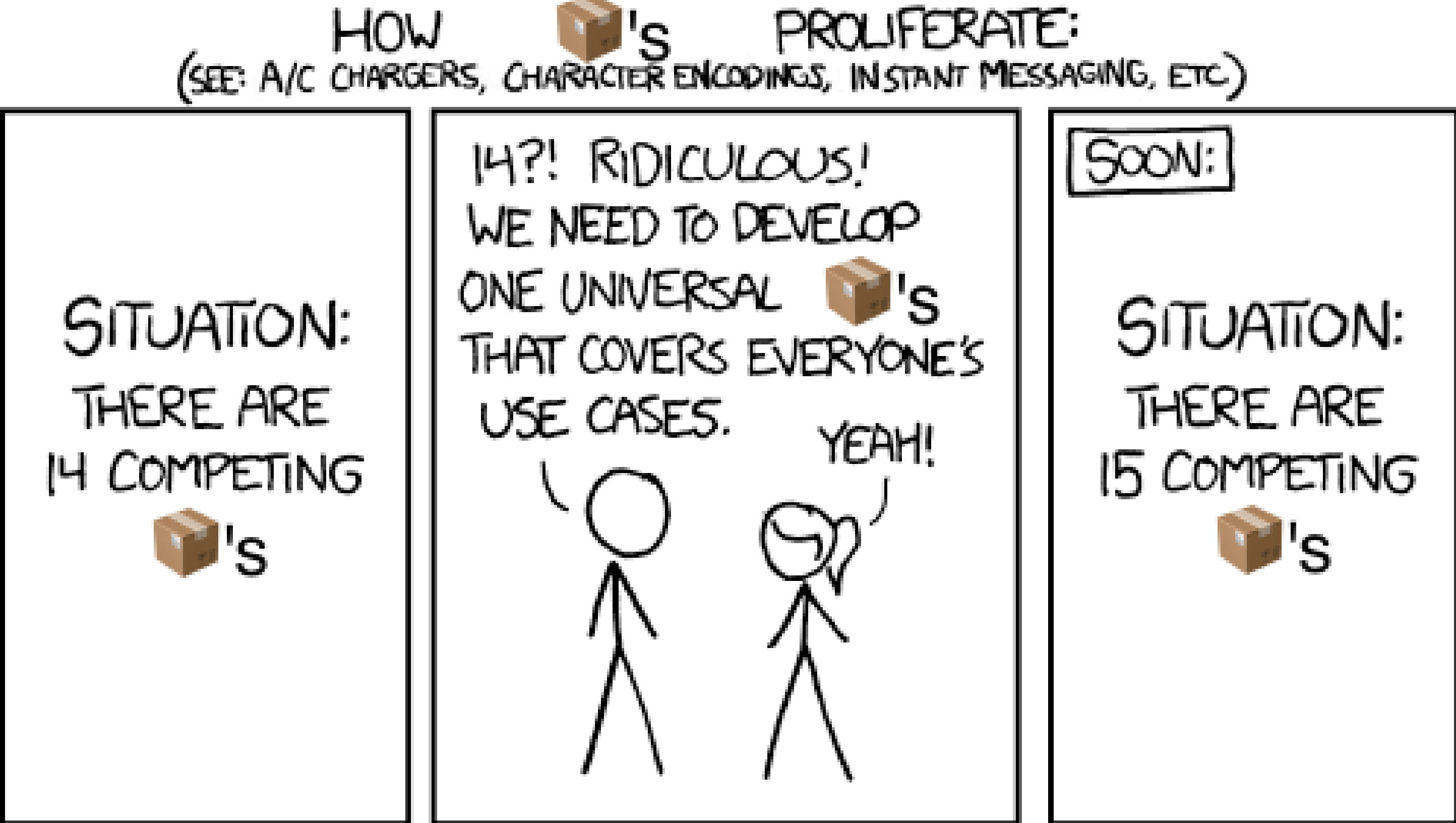

background-image: url(images/title.png)
background-position: center
background-size: cover

<h1 id="text-preprocessing-in-r" style=" position: absolute; left: 5%; top: 15%; color: #c5d8d5; font-size: 60px; -webkit-text-stroke: 2px black; ">Text Preprocessing in R</h1>

<h1 id="new-york-r" style=" position: absolute; right: 5%; top: 39%; color: #f6f4c6; font-size: 60px; -webkit-text-stroke: 2px black; ">New York R</h1>

<h1 id="text-preprocessing-in-r" style=" position: absolute; left: 5%; top: 61%; color: #fbf1d4; font-size: 60px; -webkit-text-stroke: 2px black; ">Emil Hvitfeldt</h1>

---
class: bg-right, bg1

<div style = "position:fixed; visibility: hidden">
`$$\require{color}\definecolor{orange}{rgb}{1, 0.603921568627451, 0.301960784313725}$$`
`$$\require{color}\definecolor{blue}{rgb}{0.301960784313725, 0.580392156862745, 1}$$`
`$$\require{color}\definecolor{pink}{rgb}{0.976470588235294, 0.301960784313725, 1}$$`
</div>

<script type="text/x-mathjax-config">
MathJax.Hub.Config({
  TeX: {
    Macros: {
      orange: ["{\\color{orange}{#1}}", 1],
      blue: ["{\\color{blue}{#1}}", 1],
      pink: ["{\\color{pink}{#1}}", 1]
    },
    loader: {load: ['[tex]/color']},
    tex: {packages: {'[+]': ['color']}}
  }
});
</script>

<style>
.orange {color: #FF9A4D;}
.blue {color: #4D94FF;}
.pink {color: #F94DFF;}
</style>

# About Me

.pull-left.w80[
- Data Analyst at Teladoc Health
- Adjunct Professor at American University teaching statistical machine learning using {tidymodels}
- R package developer, almost a dozen packages on CRAN (textrecipes, themis, paletteer, prismatic, textdata)
- Co-author of "Supervised Machine Learning for Text Analysis in R" with Julia Silge
- Located in sunny California
- Has 3 cats: Presto, Oreo, and Wiggles
]

---
background-image: url(images/cats.png)
background-position: center
background-size: contain

---
class: bg-corners, bg1, middle

.pull-right.w80[
.pull-left.w90[
<p style="font-size: 40pt;">
Most of data science is counting, and sometimes dividing
</p>
<cite>Hadley Wickham</cite>
]
] --- class: bg-corners, bg1, middle .pull-right.w80[ .pull-left.w90[ <p style="font-size: 40pt;"> Most of <s>data science</s> <b>text preprocessing</b> is counting, and sometimes dividing </p> <cite><s>Hadley Wickham</s> Emil Hvitfeldt</cite> ] ] --- class: bg-full, bg1, middle, center <div style="font-size: 80pt;"> What are we counting? </div> <style type="text/css"> .animal { font-size: 31pt; } .hl1 { text-decoration: underline; text-decoration-color: #FF9A4D; } .hl2 { text-decoration: underline; text-decoration-color: #F94DFF; } </style> --- class: bg-corners, bg1 .animal[ Beavers are most well known for their distinctive home-building that can be seen in rivers and streams. The beavers dam is built from twigs, sticks, leaves and mud and are surprisingly strong. Here the beavers can catch their food and swim in the water. Beavers are nocturnal animals existing in the forests of Europe and North America (the Canadian beaver is the most common beaver). Beavers use their large, flat shaped tails, to help with dam building and it also allows the beavers to swim at speeds of up to 30 knots per hour. The beaver's significance is acknowledged in Canada by the fact that there is a Canadian Beaver on one of their coins. ] --- class: bg-corners, bg1 .animal[ .hl1[Beavers are most well known for their distinctive home-building that can be seen in rivers and streams.] .hl2[The beavers dam is built from twigs, sticks, leaves and mud and are surprisingly strong.] .hl1[Here the beavers can catch their food and swim in the water.] .hl2[Beavers are nocturnal animals existing in the forests of Europe and North America (the Canadian beaver is the most common beaver).] .hl1[Beavers use their large, flat shaped tails, to help with dam building and it also allows the beavers to swim at speeds of up to 30 knots per hour.] .hl2[The beaver's significance is acknowledged in Canada by the fact that there is a Canadian Beaver on one of their coins.] 
] --- class: bg-corners, bg1 .animal[ .hl1[B].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r].hl1[s] .hl1[a].hl2[r].hl1[e] .hl1[m].hl2[o].hl1[s].hl2[t] .hl2[w].hl1[e].hl2[l].hl1[l] .hl1[k].hl2[n].hl1[o].hl2[w].hl1[n] .hl1[f].hl2[o].hl1[r] .hl1[t].hl2[h].hl1[e].hl2[i].hl1[r] .hl1[d].hl2[i].hl1[s].hl2[t].hl1[i].hl2[n].hl1[c].hl2[t].hl1[i].hl2[v].hl1[e] .hl1[h].hl2[o].hl1[m].hl2[e].hl1[-].hl2[b].hl1[u].hl2[i].hl1[l].hl2[d].hl1[i].hl2[n].hl1[g] .hl1[t].hl2[h].hl1[a].hl2[t] .hl2[c].hl1[a].hl2[n] .hl2[b].hl1[e] .hl1[s].hl2[e].hl1[e].hl2[n] .hl2[i].hl1[n] .hl1[r].hl2[i].hl1[v].hl2[e].hl1[r].hl2[s] .hl2[a].hl1[n].hl2[d] .hl2[s].hl1[t].hl2[r].hl1[e].hl2[a].hl1[m].hl2[s].hl1[.] .hl1[T].hl2[h].hl1[e] .hl1[b].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r].hl1[s] .hl1[d].hl2[a].hl1[m] .hl1[i].hl2[s] .hl2[b].hl1[u].hl2[i].hl1[l].hl2[t] .hl2[f].hl1[r].hl2[o].hl1[m] .hl1[t].hl2[w].hl1[i].hl2[g].hl1[s].hl2[,] .hl2[s].hl1[t].hl2[i].hl1[c].hl2[k].hl1[s].hl2[,] .hl2[l].hl1[e].hl2[a].hl1[v].hl2[e].hl1[s] .hl1[a].hl2[n].hl1[d] .hl1[m].hl2[u].hl1[d] .hl1[a].hl2[n].hl1[d] .hl1[a].hl2[r].hl1[e] .hl1[s].hl2[u].hl1[r].hl2[p].hl1[r].hl2[i].hl1[s].hl2[i].hl1[n].hl2[g].hl1[l].hl2[y] .hl2[s].hl1[t].hl2[r].hl1[o].hl2[n].hl1[g].hl2[.] .hl2[H].hl1[e].hl2[r].hl1[e] .hl1[t].hl2[h].hl1[e] .hl1[b].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r].hl1[s] .hl1[c].hl2[a].hl1[n] .hl1[c].hl2[a].hl1[t].hl2[c].hl1[h] .hl1[t].hl2[h].hl1[e].hl2[i].hl1[r] .hl1[f].hl2[o].hl1[o].hl2[d] .hl2[a].hl1[n].hl2[d] .hl2[s].hl1[w].hl2[i].hl1[m] .hl1[i].hl2[n] .hl2[t].hl1[h].hl2[e] .hl2[w].hl1[a].hl2[t].hl1[e].hl2[r].hl1[.] 
.hl1[B].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r].hl1[s] .hl1[a].hl2[r].hl1[e] .hl1[n].hl2[o].hl1[c].hl2[t].hl1[u].hl2[r].hl1[n].hl2[a].hl1[l] .hl1[a].hl2[n].hl1[i].hl2[m].hl1[a].hl2[l].hl1[s] .hl1[e].hl2[x].hl1[i].hl2[s].hl1[t].hl2[i].hl1[n].hl2[g] .hl2[i].hl1[n] .hl1[t].hl2[h].hl1[e] .hl1[f].hl2[o].hl1[r].hl2[e].hl1[s].hl2[t].hl1[s] .hl1[o].hl2[f] .hl2[E].hl1[u].hl2[r].hl1[o].hl2[p].hl1[e] .hl1[a].hl2[n].hl1[d] .hl1[N].hl2[o].hl1[r].hl2[t].hl1[h] .hl1[A].hl2[m].hl1[e].hl2[r].hl1[i].hl2[c].hl1[a] .hl1[(].hl2[t].hl1[h].hl2[e] .hl2[C].hl1[a].hl2[n].hl1[a].hl2[d].hl1[i].hl2[a].hl1[n] .hl1[b].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r] .hl2[i].hl1[s] .hl1[t].hl2[h].hl1[e] .hl1[m].hl2[o].hl1[s].hl2[t] .hl2[c].hl1[o].hl2[m].hl1[m].hl2[o].hl1[n] .hl1[b].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r].hl1[)].hl2[.] .hl2[B].hl1[e].hl2[a].hl1[v].hl2[e].hl1[r].hl2[s] .hl2[u].hl1[s].hl2[e] .hl2[t].hl1[h].hl2[e].hl1[i].hl2[r] .hl2[l].hl1[a].hl2[r].hl1[g].hl2[e].hl1[,] .hl1[f].hl2[l].hl1[a].hl2[t] .hl2[s].hl1[h].hl2[a].hl1[p].hl2[e].hl1[d] .hl1[t].hl2[a].hl1[i].hl2[l].hl1[s].hl2[,] .hl2[t].hl1[o] .hl1[h].hl2[e].hl1[l].hl2[p] .hl2[w].hl1[i].hl2[t].hl1[h] .hl1[d].hl2[a].hl1[m] .hl1[b].hl2[u].hl1[i].hl2[l].hl1[d].hl2[i].hl1[n].hl2[g] .hl2[a].hl1[n].hl2[d] .hl2[i].hl1[t] .hl1[a].hl2[l].hl1[s].hl2[o] .hl2[a].hl1[l].hl2[l].hl1[o].hl2[w].hl1[s] .hl1[t].hl2[h].hl1[e] .hl1[b].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r].hl1[s] .hl1[t].hl2[o] .hl2[s].hl1[w].hl2[i].hl1[m] .hl1[a].hl2[t] .hl2[s].hl1[p].hl2[e].hl1[e].hl2[d].hl1[s] .hl1[o].hl2[f] .hl2[u].hl1[p] .hl1[t].hl2[o] .hl2[3].hl1[0] .hl1[k].hl2[n].hl1[o].hl2[t].hl1[s] .hl1[p].hl2[e].hl1[r] .hl1[h].hl2[o].hl1[u].hl2[r].hl1[.] 
.hl1[T].hl2[h].hl1[e] .hl1[b].hl2[e].hl1[a].hl2[v].hl1[e].hl2[r].hl1['].hl2[s] .hl2[s].hl1[i].hl2[g].hl1[n].hl2[i].hl1[f].hl2[i].hl1[c].hl2[a].hl1[n].hl2[c].hl1[e] .hl1[i].hl2[s] .hl2[a].hl1[c].hl2[k].hl1[n].hl2[o].hl1[w].hl2[l].hl1[e].hl2[d].hl1[g].hl2[e].hl1[d] .hl1[i].hl2[n] .hl2[C].hl1[a].hl2[n].hl1[a].hl2[d].hl1[a] .hl1[b].hl2[y] .hl2[t].hl1[h].hl2[e] .hl2[f].hl1[a].hl2[c].hl1[t] .hl1[t].hl2[h].hl1[a].hl2[t] .hl2[t].hl1[h].hl2[e].hl1[r].hl2[e] .hl2[i].hl1[s] .hl1[a] .hl1[C].hl2[a].hl1[n].hl2[a].hl1[d].hl2[i].hl1[a].hl2[n] .hl2[B].hl1[e].hl2[a].hl1[v].hl2[e].hl1[r] .hl1[o].hl2[n] .hl2[o].hl1[n].hl2[e] .hl2[o].hl1[f] .hl1[t].hl2[h].hl1[e].hl2[i].hl1[r] .hl1[c].hl2[o].hl1[i].hl2[n].hl1[s].hl2[.] ] --- class: bg-corners, bg1 .animal[ .hl1[Beavers] .hl2[are] .hl1[most] .hl2[well] .hl1[known] .hl2[for] .hl1[their] .hl2[distinctive] .hl1[home-building] .hl2[that] .hl1[can] .hl2[be] .hl1[seen] .hl2[in] .hl1[rivers] .hl2[and] .hl1[streams.] .hl2[The] .hl1[beavers] .hl2[dam] .hl1[is] .hl2[built] .hl1[from] .hl2[twigs,] .hl1[sticks,] .hl2[leaves] .hl1[and] .hl2[mud] .hl1[and] .hl2[are] .hl1[surprisingly] .hl2[strong.] .hl1[Here] .hl2[the] .hl1[beavers] .hl2[can] .hl1[catch] .hl2[their] .hl1[food] .hl2[and] .hl1[swim] .hl2[in] .hl1[the] .hl2[water.] .hl1[Beavers] .hl2[are] .hl1[nocturnal] .hl2[animals] .hl1[existing] .hl2[in] .hl1[the] .hl2[forests] .hl1[of] .hl2[Europe] .hl1[and] .hl2[North] .hl1[America] .hl2[(the] .hl1[Canadian] .hl2[beaver] .hl1[is] .hl2[the] .hl1[most] .hl2[common] .hl1[beaver).] .hl2[Beavers] .hl1[use] .hl2[their] .hl1[large,] .hl2[flat] .hl1[shaped] .hl2[tails,] .hl1[to] .hl2[help] .hl1[with] .hl2[dam] .hl1[building] .hl2[and] .hl1[it] .hl2[also] .hl1[allows] .hl2[the] .hl1[beavers] .hl2[to] .hl1[swim] .hl2[at] .hl1[speeds] .hl2[of] .hl1[up] .hl2[to] .hl1[30] .hl2[knots] .hl1[per] .hl2[hour.] 
.hl1[The] .hl2[beaver's] .hl1[significance] .hl2[is] .hl1[acknowledged] .hl2[in] .hl1[Canada] .hl2[by] .hl1[the] .hl2[fact] .hl1[that] .hl2[there] .hl1[is] .hl2[a] .hl1[Canadian] .hl2[Beaver] .hl1[on] .hl2[one] .hl1[of] .hl2[their] .hl1[coins.]
]

---
class: bg-corners, bg1, center, middle

# Disclaimer

--

I'll show examples in English

--

English is not the only language out there #BenderRule

--

The difficulty of different tasks varies from language to language

--

language != text

---
class: bg-left, bg1

.pull-right.w80[
# Goal

Turn .blue[text] into .pink[numbers]

<br>

turning the .blue[text] into .pink[something machine readable]

there *will* be a loss along the way

the same way there is a loss from speech to text
]

---
class: bg-left, bg1, middle

.pull-right.w80[
.pull-left.w90[
<p style="font-size: 40pt;">
What I'll be talking about will be language/implementation agnostic
</p>
]
]

---
class: bg-corners, bg1

.center[
# Existing packages
]

.pull-left[
.center[
## tidytext
]

great for EDA and topic modeling
]

.pull-right[
.center[
## quanteda
]

Whole ecosystem, end to end
]

---
class: bg-corners, bg1

.center[
## {textrecipes}
]

.pull-left[
- strictly text preprocessing / feature engineering
- part of recipes/tidymodels
- doesn't create any custom object
- It doesn't restrict us to only use text as features
]

.pull-right[
.center[  ]
]

---

.center[  ]

---
class: bg-right, bg1, middle

## tidytext doesn't work

<br>

## quanteda is its own ecosystem

<br>

## learn transformation and apply to new data

---
class: bg-corners, bg1

.pull-right.w80[
# Scope

We are limiting this to tabular data

I would rather get a good foundation than work with the cutting edge
]

---

# Full recipe

```r
library(animals)
library(recipes)
library(textrecipes)

rec_spec <- recipe(diet ~ ., data = animals) %>%
  step_novel(lifestyle) %>%
  step_unknown(lifestyle) %>%
  step_other(lifestyle, threshold = 0.01) %>%
  step_dummy(lifestyle) %>%
  step_log(mean_weight) %>%
  step_impute_mean(mean_weight) %>%
step_text_normalization(text) %>% step_tokenize(text) %>% step_stopwords(text) %>% step_tokenfilter(text, max_tokens = 500, min_times = 5) %>% step_tfidf(text) ``` --- # Full recipe ```r library(animals) library(recipes) library(textrecipes) rec_spec <- recipe(diet ~ ., data = animals) %>% * step_novel(lifestyle) %>% * step_unknown(lifestyle) %>% * step_other(lifestyle, threshold = 0.01) %>% * step_dummy(lifestyle) %>% * step_log(mean_weight) %>% * step_impute_mean(mean_weight) %>% step_text_normalization(text) %>% step_tokenize(text) %>% step_stopwords(text) %>% step_tokenfilter(text, max_tokens = 500, min_times = 5) %>% step_tfidf(text) ``` --- # Full recipe ```r library(animals) library(recipes) library(textrecipes) rec_spec <- recipe(diet ~ ., data = animals) %>% step_novel(lifestyle) %>% step_unknown(lifestyle) %>% step_other(lifestyle, threshold = 0.01) %>% step_dummy(lifestyle) %>% step_log(mean_weight) %>% step_impute_mean(mean_weight) %>% * step_text_normalization(text) %>% * step_tokenize(text) %>% * step_stopwords(text) %>% * step_tokenfilter(text, max_tokens = 500, min_times = 5) %>% * step_tfidf(text) ``` --- class: bg-full, bg2, middle, center <div style="font-size: 110pt;"> TOKENIZATION </div> --- class: bg-corners, bg2, middle, right <div style="font-size: 50pt;"> we want to take a blob of text and turn it into something smaller </div> <br> .left[ <div style="font-size: 50pt;"> something that we can count </div> ] --- class: bg-right, bg2 # Tokenization .pull-left.w80[ - An essential part of most text analyses - Most common token == word, but sometimes we tokenize in a different way - Many options to take into consideration We are extremely fortunate that splitting by .pink[white-space] works as a good baseline for English ] --- class: bg-corners, bg2 # White spaces tokenization ```r strsplit(beaver, "\\s")[[1]] ``` ``` ## [1] "Beavers" "are" "most" "well" ## [5] "known" "for" "their" "distinctive" ## [9] "home-building" "that" "can" "be" ## [13] 
"seen" "in" "rivers" "and" ## [17] "streams." "The" "beavers" "dam" ## [21] "is" "built" "from" "twigs," ## [25] "sticks," "leaves" "and" "mud" ## [29] "and" "are" "surprisingly" "strong." ## [33] "Here" "the" "beavers" "can" ## [37] "catch" "their" "food" "and" ## [41] "swim" "in" "the" "water." ## [45] "Beavers" "are" "nocturnal" "animals" ## [49] "existing" "in" "the" "forests" ## [53] "of" "Europe" "and" "North" ## [57] "America" "(the" "Canadian" "beaver" ## [61] "is" "the" "most" "common" ## [65] "beaver)." "Beavers" "use" "their" ## [69] "large," "flat" "shaped" "tails," ## [73] "to" "help" "with" "dam" ## [77] "building" "and" "it" "also" ## [81] "allows" "the" "beavers" "to" ## [85] "swim" "at" "speeds" "of" ## [89] "up" "to" "30" "knots" ## [93] "per" "hour." "The" "beaver's" ## [97] "significance" "is" "acknowledged" "in" ## [101] "Canada" "by" "the" "fact" ## [105] "that" "there" "is" "a" ## [109] "Canadian" "Beaver" "on" "one" ## [113] "of" "their" "coins." "The" ## [117] "beaver" "colonies" "create" "one" ## [121] "or" "more" "dams" "in" ## [125] "the" "beaver" "colonies'" "habitat" ## [129] "to" "provide" "still," "deep" ## [133] "water" "to" "protect" "the" ## [137] "beavers" "against" "predators." "The" ## [141] "beavers" "also" "use" "the" ## [145] "deep" "water" "created" "using" ## [149] "beaver" "dams" "and" "to" ## [153] "float" "food" "and" "building" ## [157] "materials" "along" "the" "river." ## [161] "In" "1988" "the" "North" ## [165] "American" "beaver" "population" "was" ## [169] "60-400" "million." "Recent" "studies" ## [173] "have" "estimated" "there" "are" ## [177] "now" "around" "6-12" "million" ## [181] "beavers" "found" "in" "the" ## [185] "wild." "The" "decline" "in" ## [189] "beaver" "populations" "is" "due" ## [193] "to" "the" "beavers" "being" ## [197] "hunted" "for" "their" "fur" ## [201] "and" "for" "the" "beaver's" ## [205] "glands" "that" "are" "used" ## [209] "as" "medicine" "and" "perfume." 
## [213] "The" "beaver" "is" "also" ## [217] "hunted" "because" "the" "beavers" ## [221] "harvesting" "of" "trees" "and" ## [225] "the" "beavers" "flooding" "of" ## [229] "waterways" "may" "interfere" "with" ## [233] "other" "human" "land" "uses." ## [237] "Beavers" "are" "known" "for" ## [241] "their" "danger" "signal" "which" ## [245] "the" "beaver" "makes" "when" ## [249] "the" "beaver" "is" "startled" ## [253] "or" "frightened." "A" "swimming" ## [257] "beaver" "will" "rapidly" "dive" ## [261] "while" "forcefully" "slapping" "the" ## [265] "water" "with" "its" "broad" ## [269] "tail." "This" "means" "that" ## [273] "the" "beaver" "creates" "a" ## [277] "loud" "slapping" "noise," "which" ## [281] "can" "be" "heard" "over" ## [285] "large" "distances" "above" "and" ## [289] "below" "water." "This" "beaver" ## [293] "warning" "noise" "serves" "as" ## [297] "a" "warning" "to" "beavers" ## [301] "in" "the" "area." "Once" ## [305] "a" "beaver" "has" "made" ## [309] "this" "danger" "signal," "nearby" ## [313] "beavers" "dive" "and" "may" ## [317] "not" "come" "back" "up" ## [321] "for" "some" "time." "Beavers" ## [325] "are" "slow" "on" "land," ## [329] "but" "the" "beavers" "are" ## [333] "good" "swimmers" "that" "can" ## [337] "stay" "under" "water" "for" ## [341] "as" "long" "as" "15" ## [345] "minutes" "at" "a" "time." ## [349] "In" "the" "winter" "the" ## [353] "beaver" "does" "not" "hibernate" ## [357] "but" "instead" "stores" "sticks" ## [361] "and" "logs" "underwater" "that" ## [365] "the" "beaver" "can" "then" ## [369] "feed" "on" "through" "the" ## [373] "cold" "winter." 
``` --- class: bg-corners, bg2 # Tokenization: {tokenizers} package ```r tokenizers::tokenize_words(animals$text[74]) ``` ``` ## [[1]] ## [1] "beavers" "are" "most" "well" "known" ## [6] "for" "their" "distinctive" "home" "building" ## [11] "that" "can" "be" "seen" "in" ## [16] "rivers" "and" "streams" "the" "beavers" ## [21] "dam" "is" "built" "from" "twigs" ## [26] "sticks" "leaves" "and" "mud" "and" ## [31] "are" "surprisingly" "strong" "here" "the" ## [36] "beavers" "can" "catch" "their" "food" ## [41] "and" "swim" "in" "the" "water" ## [46] "beavers" "are" "nocturnal" "animals" "existing" ## [51] "in" "the" "forests" "of" "europe" ## [56] "and" "north" "america" "the" "canadian" ## [61] "beaver" "is" "the" "most" "common" ## [66] "beaver" "beavers" "use" "their" "large" ## [71] "flat" "shaped" "tails" "to" "help" ## [76] "with" "dam" "building" "and" "it" ## [81] "also" "allows" "the" "beavers" "to" ## [86] "swim" "at" "speeds" "of" "up" ## [91] "to" "30" "knots" "per" "hour" ## [96] "the" "beaver's" "significance" "is" "acknowledged" ## [101] "in" "canada" "by" "the" "fact" ## [106] "that" "there" "is" "a" "canadian" ## [111] "beaver" "on" "one" "of" "their" ## [116] "coins" "the" "beaver" "colonies" "create" ## [121] "one" "or" "more" "dams" "in" ## [126] "the" "beaver" "colonies" "habitat" "to" ## [131] "provide" "still" "deep" "water" "to" ## [136] "protect" "the" "beavers" "against" "predators" ## [141] "the" "beavers" "also" "use" "the" ## [146] "deep" "water" "created" "using" "beaver" ## [151] "dams" "and" "to" "float" "food" ## [156] "and" "building" "materials" "along" "the" ## [161] "river" "in" "1988" "the" "north" ## [166] "american" "beaver" "population" "was" "60" ## [171] "400" "million" "recent" "studies" "have" ## [176] "estimated" "there" "are" "now" "around" ## [181] "6" "12" "million" "beavers" "found" ## [186] "in" "the" "wild" "the" "decline" ## [191] "in" "beaver" "populations" "is" "due" ## [196] "to" "the" "beavers" "being" "hunted" 
## [201] "for" "their" "fur" "and" "for" ## [206] "the" "beaver's" "glands" "that" "are" ## [211] "used" "as" "medicine" "and" "perfume" ## [216] "the" "beaver" "is" "also" "hunted" ## [221] "because" "the" "beavers" "harvesting" "of" ## [226] "trees" "and" "the" "beavers" "flooding" ## [231] "of" "waterways" "may" "interfere" "with" ## [236] "other" "human" "land" "uses" "beavers" ## [241] "are" "known" "for" "their" "danger" ## [246] "signal" "which" "the" "beaver" "makes" ## [251] "when" "the" "beaver" "is" "startled" ## [256] "or" "frightened" "a" "swimming" "beaver" ## [261] "will" "rapidly" "dive" "while" "forcefully" ## [266] "slapping" "the" "water" "with" "its" ## [271] "broad" "tail" "this" "means" "that" ## [276] "the" "beaver" "creates" "a" "loud" ## [281] "slapping" "noise" "which" "can" "be" ## [286] "heard" "over" "large" "distances" "above" ## [291] "and" "below" "water" "this" "beaver" ## [296] "warning" "noise" "serves" "as" "a" ## [301] "warning" "to" "beavers" "in" "the" ## [306] "area" "once" "a" "beaver" "has" ## [311] "made" "this" "danger" "signal" "nearby" ## [316] "beavers" "dive" "and" "may" "not" ## [321] "come" "back" "up" "for" "some" ## [326] "time" "beavers" "are" "slow" "on" ## [331] "land" "but" "the" "beavers" "are" ## [336] "good" "swimmers" "that" "can" "stay" ## [341] "under" "water" "for" "as" "long" ## [346] "as" "15" "minutes" "at" "a" ## [351] "time" "in" "the" "winter" "the" ## [356] "beaver" "does" "not" "hibernate" "but" ## [361] "instead" "stores" "sticks" "and" "logs" ## [366] "underwater" "that" "the" "beaver" "can" ## [371] "then" "feed" "on" "through" "the" ## [376] "cold" "winter" ``` --- class: bg-right, bg2 # word boundary algorithm (ICU) <div style="font-size: 11pt;"> - Break at the start and end of text, unless the text is empty. - Do not break within CRLF (new line characters). - Otherwise, break before and after new lines (including CR and LF). - Do not break within emoji zwj sequences. 
- Keep horizontal whitespace together.
- Ignore Format and Extend characters, except after sot (start of text), CR, LF, and new line.
- Do not break between most letters.
- Do not break letters across certain punctuation.
- Do not break within sequences of digits, or digits adjacent to letters (“3a,” or “A3”).
- Do not break within sequences, such as “3.2” or “3,456.789.”
- Do not break between Katakana.
- Do not break from extenders.
- Do not break within emoji flag sequences.
- Otherwise, break everywhere (including around ideographs).

???

finding word boundaries according to the specification from the International Components for Unicode (ICU)

---
class: bg-right, bg2

# Tokenization considerations

- Should we turn UPPERCASE letters to lowercase?

--

- How should we handle punctuation⁉️

--

- What about non-word characters .blue[inside] words?

--

- Should compound words be split or multi-word ideas be kept together?

---
class: bg-corners, bg2

.pull-right.w80[
# Problems

```r
table(c("flowers", "bush", "ﬂowers"))
```
]

---
class: bg-corners, bg2

.pull-right.w80[
# Problems

```r
table(c("flowers", "bush", "ﬂowers"))
```

```
## 
##    bush flowers  ﬂowers 
##       1       1       1
```
]

---
class: bg-corners, bg2

.pull-right.w80[
# Problems

```r
tokenize_characters("ﬂowers")
```

```
## [[1]]
## [1] "ﬂ" "o" "w" "e" "r" "s"
```

Ligatures can sneak in everywhere!
]

---
class: bg-left, bg2

.pull-right.w80[
# Problems

This doesn't even begin to describe the difference between slang and domain knowledge

- wow
- wooow
- wooooow
- woooooooooow!!

Are these the same word? Are they different enough?
]

---
class: bg-right, bg2

# Problems

What about emojis? Are emojis words?

- Let's get some 🌮s
- I love you ❤️

---
class: bg-full, bg2, middle, center

<div style="font-size: 100pt;">
The domain you are in matters!
</div>

---
class: bg-corners, bg2

.pull-right.w90[
# {textrecipes}

{textrecipes} realizes that there are millions of ways to tokenize and won't tie you down to one.
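
One way to read "won't tie you down": a tokenizer can be just a function. A minimal sketch in base R, assuming the contract is "character vector in, list of character vectors out" (one list element per document). The name `my_whitespace_tokenizer` is hypothetical and not part of {textrecipes} or {tokenizers}.

```r
# Hypothetical custom tokenizer (illustration only).
# Input:  a character vector, one element per document.
# Output: a list of character vectors, one element per document.
my_whitespace_tokenizer <- function(x) {
  # Lowercase, drop leading/trailing white-space,
  # then split on runs of white-space.
  strsplit(tolower(trimws(x)), "\\s+")
}

my_whitespace_tokenizer(c("Beavers are nocturnal", "I love you"))
```

A function of this shape is the kind of thing that gets handed to `step_tokenize()` through `custom_token`. Note that this sketch keeps punctuation attached to words, which is exactly the sort of choice the domain should decide.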
Defaults to {tokenizers} But you can pass in your own tokenizer There are even bindings to other packages/languages spacyr, tokenizers.bpe, udpipe with more to come ] --- class: bg-right, bg2 # Default {tokenizers} .pull-left.w80[ ```r rec <- recipe(~ text, data = animals) %>% step_tokenize(text) ``` ] --- class: bg-right, bg2 # Default {tokenizers} .pull-left.w80[ ```r rec <- recipe(~ text, data = animals) %>% step_tokenize(text, options = list(strip_punct = FALSE, lowercase = FALSE)) ``` ] --- class: bg-right, bg2 # Custom tokenizer .pull-left.w80[ ```r rec <- recipe(~ text, data = animals) %>% step_tokenize(text, custom_token = my_amazing_tokenizer) ``` ] --- class: bg-right, bg2 # spacy via {spacyr} .pull-left.w80[ ```r rec <- recipe(~ text, data = animals) %>% step_tokenize(text, engine = "spacyr") ``` ] --- class: bg-right, bg2 # {tokenizers.bpe} .pull-left.w80[ ```r rec <- recipe(~ text, data = animals) %>% step_tokenize(text, engine = "tokenizers.bpe", training_options = list(vocab_size = 1000)) ``` ] --- class: bg-right, bg2 # {udpipe} .pull-left.w80[ ```r library(udpipe) udmodel <- udpipe_download_model(language = "english") rec <- recipe(~ text, data = animals) %>% step_tokenize(text, engine = "udpipe", training_options = list(model = udmodel)) ``` ] --- class: bg-full, bg3, middle, center <div style="font-size: 150pt;"> STEMMING </div> --- class: bg-full, bg3, middle, right <div style="font-size: 100pt;"> Act of modifying tokens once they have become tokens </div> --- class: bg-right, bg3, middle <div style="font-size: 70pt;"> - Porter Stemmer - Ending s removal --- class: bg-left, bg3, middle .pull-right.w80[ <div style="font-size: 50pt;"> We are again combining buckets in the hope that they can be treated equally </div> ] --- class: bg-right, bg3 # Stemming Example ``` ## # A tibble: 8 x 4 ## `Original word` `Remove S` `Plural endings` `Porter stemming` ## <chr> <chr> <chr> <chr> ## 1 distinctive distinctive distinctive distinct ## 2 building building 
building build
## 3 surprisingly surprisingly surprisingly surprisingli
## 4 animals animal animal anim
## 5 beaver beaver beaver beaver
## 6 significance significance significance signific
## 7 colonies colonie colony coloni
## 8 studies studie study studi
```

---
class: bg-right, bg3

# Default {SnowballC}

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stem(text)
```
]

---
class: bg-right, bg3

# Custom Stemming function

.pull-left.w80[
```r
remove_s <- function(x) gsub("s$", "", x)

rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stem(text, custom_stemmer = remove_s)
```
]

---
class: bg-corners, bg3

.pull-right.w90[
# Lemmatization

A little more powerful than stemming, but takes a little longer to run

Implementations:

- spacyr
- udpipe
]

---
class: bg-right, bg3

# spacy lemmatization

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text, engine = "spacyr") %>%
  step_lemma(text)
```
]

---
class: bg-full, bg4, middle, center

<div style="font-size: 200pt;">
STOP WORDS
</div>

---
class: bg-corners, bg4

.center[
# Definitions from the Web
]

--

> "In natural language processing, useless words (data), are referred to as stop words."

<br>

--

> "In computing, stop words are words that are filtered out before or after the natural language data (text) are processed."

<br>

--

> "Stopwords are the words in any language which does not add much meaning to a sentence. They can safely be ignored without sacrificing the meaning of the sentence"

---
class: bg-swirl, bg4

.center[
<span style='font-size:400px;'>🤔</span>
]

---
class: bg-full, bg4, middle, right

<div style="font-size: 70pt;">
this gives the illusion that stop words are easy to work with and are without problems
</div>

---
class: bg-corners, bg4

.right[
<div style="font-size: 50pt;">
what are stop words really?
</div>
]

<br>

<div style="font-size: 40pt;">
Low-information words that contribute little value to the task
</div>

<br>

<div style="font-size: 40pt;">
The information of words lives on a continuum
</div>

---

.pull-left[
## Word information

Each rectangle represents a word in one document

We will illustrate the information that each word carries with color.

<span style='color:#3E049CFF;'>low information words</span>

<span style='color:#FCCD25FF;'>high information words</span>
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-22-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.pull-left[
## Word information

Uniform information

If this were true, removing any word would hurt

# 👎
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-23-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.pull-left[
## Word information

Random information

No way to figure out which words to remove

# 👎
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-24-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.pull-left[
## Word information

Random information

No way to figure out which words to remove

# 👎
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-25-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.pull-left[
## Word information

High variance information (diamonds in the rough)

A few words carry a lot of information; most words carry none

# 👍
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-26-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.pull-left[
## Word information

High variance information (diamonds in the rough)

A few words carry a lot of information; most words carry none

# 👍
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-27-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.pull-left[
## Word information

Low variance information

Smooth transition between low and high information words

# 👍
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-28-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.pull-left[
## Word information

Low variance information

Smooth transition between low and high information words

# 👍
]

.pull-right[
<img src="index_files/figure-html/unnamed-chunk-29-1.png" width="80%" style="display: block; margin: auto;" />
]

---

.center[
# Information distribution
]

<img src="index_files/figure-html/unnamed-chunk-30-1.png" width="700px" style="display: block; margin: auto;" />

---

.center[
# Information distribution
]

<img src="index_files/figure-html/unnamed-chunk-31-1.png" width="700px" style="display: block; margin: auto;" />

---

.center[
# Information distribution
]

<img src="index_files/figure-html/unnamed-chunk-32-1.png" width="700px" style="display: block; margin: auto;" />

---

.center[
# Information distribution
]

<img src="index_files/figure-html/unnamed-chunk-33-1.png" width="700px" style="display: block; margin: auto;" />

---

.center[
# Information distribution
]

<img src="index_files/figure-html/unnamed-chunk-34-1.png" width="700px" style="display: block; margin: auto;" />

---
class: bg-right, bg4

# How can we handle this?

- pre-made lists
- homemade lists

---
class: bg-right, bg4

# Pre-made lists

I have talked about stop words as if there were only a handful of lists out there

and as if each list were well constructed

---
class: bg-corners, bg4

# English stop word lists

.pull-left[
- Galago (forumstop)
- EBSCOhost
- CoreNLP (Hardcoded)
- Ranks NL (Google)
- Lucene, Solr, Elasticsearch
- MySQL (InnoDB)
- Ovid (Medical information services)
]

.pull-right[
- Bow (libbow, rainbow, arrow, crossbow)
- LingPipe
- Vowpal Wabbit (doc2lda)
- Text Analytics 101
- LexisNexis®
- Okapi (gsl.cacm)
- TextFixer
- DKPro
]

---
class: bg-corners, bg4

# English stop word lists

.pull-left[
- Postgres
- CoreNLP (Acronym)
- NLTK
- Spark ML lib
- MongoDB
- Quanteda
- Ranks NL (Default)
- Snowball (Original)
]

.pull-right[
- Xapian
- 99webTools
- Reuters Web of Science™
- Function Words (Cook 1988)
- Okapi (gsl.sample)
- Snowball (Expanded)
- Galago (stopStructure)
- DataScienceDojo
]

---
class: bg-corners, bg4

# English stop word lists

.pull-left[
- CoreNLP (stopwords.txt)
- OkapiFramework
- ATIRE (NCBI Medline)
- scikit-learn
- Glasgow IR
- Function Words (Gilner, Morales 2005)
- Gensim
]

.pull-right[
- Okapi (Expanded gsl.cacm)
- spaCy
- C99 and TextTiling
- Galago (inquery)
- Indri
- Onix, Lextek
- GATE (Keyphrase Extraction)
]

---
class: bg-left, bg4

.pull-right.w80[
<div style="font-size: 80pt;">
Stop word lists are sensitive to
</div>

<div style="font-size: 50pt;">

- tokenization
- capitalization
- stemming
]

---
class: bg-right, bg4

<div style="font-size: 50pt;">
Non-English stop word lists
</div>

- Make sure that your list works in the target language
- A direct translation of an English stop word list will not be sufficient
- Know the target language or
- Hire a consultant who knows the language

---
class: bg-full, bg4, middle, center

<div style="font-size: 140pt;">
LOOK AT YOUR STOP WORD LIST
</div>

---
class: bg-corners, bg4

# funky stop words quiz #1

.pull-left[
- he's
- she's
- himself
- herself
]

<div class="countdown" id="timer_60c93125" style="right:0;bottom:0;" data-warnwhen="0">
<code class="countdown-time"><span class="countdown-digits minutes">00</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">30</span></code>
</div>

---
class: bg-corners, bg4

# funky stop words quiz #1

.pull-left[
- he's
- .orange[she's]
- himself
- herself
]

.pull-right[
.orange[she's] doesn't appear in the SMART list
]

---
class: bg-corners, bg4

# funky stop words quiz #2

.pull-left[
- owl
- bee
- fify
- system1
]

<div class="countdown" id="timer_60c92e3e" style="right:0;bottom:0;" data-warnwhen="0">
<code class="countdown-time"><span class="countdown-digits minutes">00</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">30</span></code>
</div>

---
class: bg-corners, bg4

# funky stop words quiz #2

.pull-left[
- owl
- bee
- .orange[fify]
- system1
]

.pull-right[
.orange[fify] went undetected for 3 years (2012 to 2015) in scikit-learn
]

---
class: bg-corners, bg4

# funky stop words quiz #3

.pull-left[
- substantially
- successfully
- sufficiently
- statistically
]

<div class="countdown" id="timer_60c92e48" style="right:0;bottom:0;" data-warnwhen="0">
<code class="countdown-time"><span class="countdown-digits minutes">00</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">30</span></code>
</div>

---
class: bg-corners, bg4

# funky stop words quiz #3

.pull-left[
- substantially
- successfully
- sufficiently
- .orange[statistically]
]

.pull-right[
.orange[statistically] doesn't appear in the Stopwords ISO list
]

---
class: bg-right, bg4

.pull-left.w80[
# General idea about removing tokens

We can remove

- high frequency words (we should look at them first, because they might carry signal)
- low frequency words (more noise than signal)
- words chosen using domain knowledge
- words dropped for computational reasons
]

---

# Stop word removal using {stopwords}

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text)
```
]

---

# Stop word removal using {stopwords}

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text, stopword_source = "smart")
```
]

---

# Stop word removal using {stopwords}

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text, language = "de", stopword_source = "snowball")
```
]

---

# Stop word removal using {stopwords}

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text, custom_stopword_source = my_stopwords)
```
]

---

# Stop word removal by filtering

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_tokenfilter(text, min_times = 10)
```
]

---

# Stop word removal by filtering

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_tokenfilter(text, max_times = 100)
```
]

---

# Stop word removal by filtering

.pull-left.w80[
```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_tokenfilter(text, max_tokens = 2000)
```
]

---
class: bg-full, bg5, middle, center

<div style="font-size: 120pt;">
EMBEDDINGS
</div>

---
class: bg-full, bg5, middle, center

<div style="font-size: 120pt;">
turning tokens into numbers
</div>

---
class: bg-left, bg5

.pull-right.w80[
<div style="font-size: 50pt;">

- Count
- TF-IDF
- Embeddings
- Hashing
- Sequence one-hot

</div>
]

---
class: bg-corners, bg5

.pull-right.w90[
# Counts

```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text) %>%
  step_tokenfilter(text, max_tokens = 1000) %>%
  step_tf(text)
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# Counts

```
## # A tibble: 610 x 5
##    tf_text_ability tf_text_able tf_text_according tf_text_across tf_text_active
##              <dbl>        <dbl>             <dbl>          <dbl>          <dbl>
##  1               0            7                 0              0              0
##  2               0            0                 0              0              2
##  3               0            4                 0              0              0
##  4               0            0                 0              0              4
##  5               0            2                 0              0              0
##  6               0            3                 0              2              1
##  7               0            1                 0              2              1
##  8               0            2                 0              1              0
##  9               0            3                 0              0              0
## 10               0            2                 0              1              0
## # … with 600 more rows
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# Binary Counts

```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text) %>%
  step_tokenfilter(text, max_tokens = 1000) %>%
  step_tf(text, weight_scheme = "binary")
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# Binary Counts

```
## # A tibble: 610 x 5
##    tf_text_ability tf_text_able tf_text_according tf_text_across tf_text_active
##              <lgl>        <lgl>             <lgl>          <lgl>          <lgl>
##  1           FALSE         TRUE             FALSE          FALSE          FALSE
##  2           FALSE        FALSE             FALSE          FALSE           TRUE
##  3           FALSE         TRUE             FALSE          FALSE          FALSE
##  4           FALSE        FALSE             FALSE          FALSE           TRUE
##  5           FALSE         TRUE             FALSE          FALSE          FALSE
##  6           FALSE         TRUE             FALSE           TRUE           TRUE
##  7           FALSE         TRUE             FALSE           TRUE           TRUE
##  8           FALSE         TRUE             FALSE           TRUE          FALSE
##  9           FALSE         TRUE             FALSE          FALSE          FALSE
## 10           FALSE         TRUE             FALSE           TRUE          FALSE
## # … with 600 more rows
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# TF-IDF

```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text) %>%
  step_tokenfilter(text, max_tokens = 1000) %>%
  step_tfidf(text)
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# TF-IDF

```
## # A tibble: 610 x 4
##    tfidf_text_ability tfidf_text_able tfidf_text_according tfidf_text_across
##                 <dbl>           <dbl>                <dbl>             <dbl>
##  1                  0          0.0159                    0                 0
##  2                  0               0                    0                 0
##  3                  0          0.0106                    0                 0
##  4                  0               0                    0                 0
##  5                  0          0.0112                    0                 0
##  6                  0         0.00559                    0           0.00441
##  7                  0         0.00249                    0           0.00589
##  8                  0         0.00500                    0           0.00295
##  9                  0         0.00697                    0                 0
## 10                  0         0.00433                    0           0.00256
## # … with 600 more rows
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# Feature Hashing

```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_stopwords(text) %>%
  step_texthash(text, num_terms = 1024)
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# Feature Hashing (1024)

```
## # A tibble: 610 x 5
##    text_hash0001 text_hash0002 text_hash0003 text_hash0004 text_hash0005
##            <dbl>         <dbl>         <dbl>         <dbl>         <dbl>
##  1            -1            -5             1            -1             0
##  2             0             0             0             0             0
##  3             0             0             0             0             0
##  4             0             0             0            -1             0
##  5            -1             0             0             0             0
##  6             0            -2             0             0             0
##  7             0            -1             1             0             0
##  8             0            -1             0             0             0
##  9             0             0             0             0             0
## 10             0            -2             0             0             0
## # … with 600 more rows
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# Feature Hashing (64)

```
## # A tibble: 610 x 5
##    text_hash01 text_hash02 text_hash03 text_hash04 text_hash05
##          <dbl>       <dbl>       <dbl>       <dbl>       <dbl>
##  1           0          -5           5           0           7
##  2           0          -2          27          -1           2
##  3           0          -1           0           0           2
##  4         -23          -1           6          -1           4
##  5          -4           0           2           0           4
##  6           2          -6           5           0           4
##  7          -1          -3           3           1           2
##  8           2          -1           3          -3           0
##  9           0          -2           1           1           2
## 10          -1           2           7          -2           1
## # … with 600 more rows
```
]

---
class: bg-corners, bg5

.pull-right.w90[
# Feature Hashing (16)

```
## # A tibble: 610 x 5
##    text_hash01 text_hash02 text_hash03 text_hash04 text_hash05
##          <dbl>       <dbl>       <dbl>       <dbl>       <dbl>
##  1          -4          -9           7          20          39
##  2          -4         -16          24           3          -2
##  3          -5          -8           5         -39          35
##  4         -27          -5           5          17           2
##  5           1          -5           4           4           2
##  6          -4         -16           5          24         -19
##  7         -15          -7           2          16           8
##  8          -4           1           2           7           3
##  9         -10         -15           3          66         -12
## 10         -22          -9          11          13           2
## # … with 600 more rows
```
]

---
class: bg-right, bg5

# word embeddings

<div style="font-size: 50pt;">

- word2vec
- fasttext
- glove

</div>

---
class: bg-right, bg5

.pull-left.w80[
# word embeddings (super simplified)

They all try to transform the text so that different points in space mean different words

(it doesn't have to be words; this can be applied to any type of token)
]

---
class: bg-right, bg5

.pull-left.w80[
# word embeddings

Since we are staying with tabular output, we can't use this information to its fullest

Summing, averaging, or taking the max could be used in a pinch
]

---
class: bg-corners, bg5

.pull-right.w90[
# word embedding

```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_word_embeddings(text, embeddings = glove_embedding, aggregation = "mean")
```
]

---
class: bg-left, bg5

.pull-right.w80[
# sequence one-hot

All other methods we have seen so far are "bag-of-words"

Sequence one-hot allows us to retain some of the token order

This could be useful for some deep learning methods
]

---
class: bg-corners, bg5

.pull-right.w80[
# sequence one-hot

```r
rec <- recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_sequence_onehot(text)
```
]

---
class: bg-corners, bg5

.pull-right.w80[
# sequence one-hot

```r
recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_sequence_onehot(text) %>%
  prep() %>%
  juice() %>%
  select(1:5)
```
]

---
class: bg-corners, bg5

.pull-right.w80[
# sequence one-hot

```r
recipe(~ text, data = animals) %>%
  step_tokenize(text) %>%
  step_sequence_onehot(text) %>%
  prep() %>%
  tidy(2) %>%
  slice(10406:10415)
```
]

---
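class: bg-corners, bg5

.pull-right.w80[
# sequence one-hot (toy sketch)

A minimal base R sketch of the idea, not the {textrecipes} implementation. The two toy documents, the `encode()` helper, and the sequence length of 4 are made up for illustration. Padding and truncating at the start of the sequence is an assumption here.

```r
# Hypothetical toy documents, not the `animals` data used in the talk
docs <- c("the cat sat", "the dog sat down quickly")
tokens <- strsplit(docs, " ", fixed = TRUE)

# Vocabulary: every distinct token gets an integer index; 0 is kept for padding
vocab <- sort(unique(unlist(tokens)))

# Illustrative helper: turn one token vector into a fixed-length integer sequence
encode <- function(x, vocab, sequence_length = 4) {
  ids <- match(x, vocab)
  ids <- tail(ids, sequence_length)               # too long: keep the last tokens
  c(rep(0L, sequence_length - length(ids)), ids)  # too short: pad with 0 in front
}

# One row per document, one column per position in the sequence
encoded <- t(vapply(tokens, encode, integer(4), vocab = vocab))
encoded
```

Row 1 becomes `0 6 1 5` and row 2 becomes `2 5 3 4`: each row keeps the tokens in order, which a bag-of-words count would throw away.
]

---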
class: bg-right, bg5

.pull-left.w80[
# Interpretations

There is a lot of talk about algorithmic bias

Much of this is related to the many advances in large language models

A general modeling tip is to start simple with a baseline and then build up

A benefit of using these count-based methods is that they are quite easy to inspect
]

---
class: bg-right, bg5

.pull-left.w80[
# Interpretations

This can be passed into topic modeling or supervised modeling

The steps you took along the way will influence what type of model works better

Look ahead to the models: many of these methods produce sparse and correlated data
]

---
class: bg-corners

.pull-left[
.center[

]
]

.pull-right[
<br>
<br>
<br>
<div style="font-size: 70pt;">
smltar.com
</div>

More depth and examples focused on supervised learning

Available for preorder now
]

---
class: bg-corners, bg5, center, middle

# Thank you!

### <i class="fab fa-github "></i> [EmilHvitfeldt](https://github.com/EmilHvitfeldt/)

### <i class="fab fa-twitter "></i> [@Emil_Hvitfeldt](https://twitter.com/Emil_Hvitfeldt)

### <i class="fab fa-linkedin "></i> [emilhvitfeldt](https://linkedin.com/in/emilhvitfeldt/)

### <i class="fas fa-laptop "></i> [www.hvitfeldt.me](https://www.hvitfeldt.me)

Slides created via the R package [xaringan](https://github.com/yihui/xaringan).